Purpose of Artificial Intelligence

What’s the point of AI and why is it wrong to ask businesses how they use it?

Dominik Hodbod | Dec 4, 2025

You will have heard that AI is here to change how people work, and whether they work at all, fundamentally transforming the labor force: likely starting with white-collar jobs and eventually making the contribution of humans to any productivity irrelevant.

And then, of course – with a bigger leap – you will have noted the apocalyptic scenario that human existence will one day be at the mercy of AI overlords.

A great many know this AI revolution narrative. Many accept its inevitability. But far too few stop to think why.

Within the constraints of a short blog piece, I try to take that pause and warn how we’re getting the “revolution” wrong already.

Limits of this post

In this post, I shall limit the discussion to the world of business. This time, it is also fairly general and not specific to pharmacovigilance, as I plan on looking at PV in more detail through the lens of this article later.

Further limiting the scope, I’ll steer clear of debating superintelligence and AGI. I will, in fact, even avoid discussing the larger AI impact on the nature of work and what that means for society in coming years (plenty of attention is given to this elsewhere, some of it even in my own posts). I won’t even question the seemingly commonly accepted storyline of GenAI -> AI agents in firms -> no jobs -> AI overlords (it should be questioned, but not by me, at least not in this article).

Side-note:

I will say, though, that it is worrying how often this storyline and those similar to it are simply accepted, with what I think is still insufficient proof that we are actually on that trajectory [1].

But that is, of course, what hype is and, after all, it’s hard to resist the hype, so most just go along with it. One only needs to look at the massive build-out of AI infrastructure and the related record valuations of “AI companies”, both public and private, many of them yet to turn a profit (valuations that are already ringing “AI bubble” alarm bells, even as those alarms are dismissed as effectively a necessary part of progress), to be sure that, apparently, society has largely accepted [2] the aforementioned storyline (questioning maybe just the last AGI step).

AI has been labelled as a transformative technology, compared to electricity or the internet (without either of which it would never exist) and, it would seem, there is no avoiding its impact on how we work now and in the future.

As I mentioned, I will not necessarily question the future of AI and whether the hype around it is justified.

It very well may be, and I myself believe that AI, as an evolution of older technologies [3] and other non-technology innovations, has a strong potential to benefit society and it is very likely going to be transformative for many industries.

But more on that later.

What is the point of all this?

Amid all the unquestionable hype, I feel that people are not asking this question enough. Many can tell you why AI is going to transform pretty much everything. But few can tell you how exactly. And even fewer can show you what it really is that they like about AI so much. Among businesses, a truly limited number of people can explain what the purpose of AI in their company actually is.

I think the problem with hype – even when it’s justified – is that it becomes so pervasive we stop seeing the forest for the trees. And that I think is kind of what is happening today. We’ve somehow accepted that AI is rewriting the playbook, but, in many cases, we don’t really know why. Or at least, we can’t explain it.

Businesses have been coming up with AI policies and AI strategies and I’m guessing any firm – at least in the “information” sector – has already had to answer the question: “How do you use AI?”

Not why or even if. It is just assumed you must somehow be using it without having to define the purpose of it. Some have even argued companies should appoint “chief AI officers”, clearly embedding a specific tool (AI) into the core structure of firms, no matter its objectives.

Why am I ranting about this?

History may one day tell us that, in 2025, we were at the beginning of the AI age.

We’re now at the start. And I think we’re already mostly getting it wrong. At least in the business world.

If you’ve found yourself promoting your company because “we use AI” or if you’ve found yourself writing an AI policy document, then my rant is mainly for you [4].

When a company defines an AI strategy and basically says “we use AI” or “we look at ways to implement AI in our operations”, it is kind of like contracting developers for a house improvement project and telling the developers to use a nail gun, hammer, and a jigsaw, but not telling them what it is they’re building.

I’m sure that artificial intelligence will go through a series of philosophical debates on what it fundamentally is and how it differs from human intelligence, etc., so I won’t attempt it here. In the context of application across businesses, I don’t think we should think of AI as anything other than a tool. It is a tool that helps us achieve our goals [5].

But, somehow, businesses now care more about the tools they use than about what it is they use them for. In a sense, using AI as a tool has become the end itself rather than a means to an end.

Why is that a problem?

First, when companies fail to define the purpose of applying a technology that is meant to be profoundly transformative, it is very similar to failing to define the purpose of the company itself (I’ve argued earlier how troubling this is, at least in our line of work). And companies without purpose will (or at least should) find it hard to continue their existence (here I see the broader worry of a bubble bursting).

Second, nobody knows what AI really is. The people who build it admit limitations to their knowledge of the very thing they’re building (or “growing”).

That may, of course, be natural to the technology itself and a core reason why it seems to be so unique (also one of the scarier things in the “AI overlord”/“end of the world” rhetoric [6]). Still, even in the sense of what is “knowable” about the technology, that knowledge seems to be limited to very few individuals globally, as evidenced by the eye-watering salaries of some AI engineers.

But a lot more broadly, and why I think this is a problem in our context, the vast majority of people, including business leaders, know very little about the technology.

And yet, it is somehow acceptable that every company has to adopt it (“How do you use AI?”, “What’s your AI policy?”, “Who’s your CAIO?”). So, most businesses have accepted a tool to be the defining feature of their future, but don’t really know what it is and why they’re using it.

Third, not many businesses can explain how exactly they use AI. This is, naturally, related to the problems of the missing what and why.

If you don’t define the purpose of it and what it actually is, describing the ways you implement it is all the more difficult. But that is what we largely get. Businesses are typically very vague about the ways of using the tool they spend so much time touting. Scrolling through my LinkedIn feed, I see laughable examples daily. And that is both a result of and a contributing factor to the general lack of understanding and confusion about AI.

To use the earlier analogy, we decided to use a hammer for our house-improvement project. But we don’t know what we’re building (why), we don’t know how to use the hammer (how), and we don’t even know what a hammer actually is (what).

But, somehow, we know that there is absolutely no way we can do anything without the hammer.

What to do?

Now, I know the previous analogy isn’t fair to all businesses. Many are good at defining the purpose of AI and how they’re applying it, even at framing what it is (or at least what it means to them). And, I’d suggest, it is those who are probably worth doing business with (when there is a choice).

So, a simple recipe I see here is for businesses to be concerned primarily with clear definitions of what AI means to them, why they use it, and how they implement it (preferably in lay language). I also think making people answer these questions is a good way to cherry-pick among suppliers.

Equally important, we should think hard about the “peer pressure” of using AI at all. At the very least, anyone asking a business how they use it should first have clarity on their part of what they want it used for. I don’t think that happens very often. The number of RfIs I’ve seen from buyers asking about “how AI is used in your organization” without defining what for is too large to ignore.

In a lot of ways, this goes back to my earlier argument about the lack of philosophy and purpose of many companies, an issue much older than AI. Saying that you’re a market leader at something (with no evidence) is just not enough. If you can’t define what you do, then why does your firm exist?

Where I stand

For me, in a nutshell, rather than diving into developing your AI strategy or looking to fill “AI jobs”, it is far more important to know well what your business has set out to achieve, what the reason for that goal was, and what your plan for meeting the objective is. And, naturally, none of these have to include one specific tool.

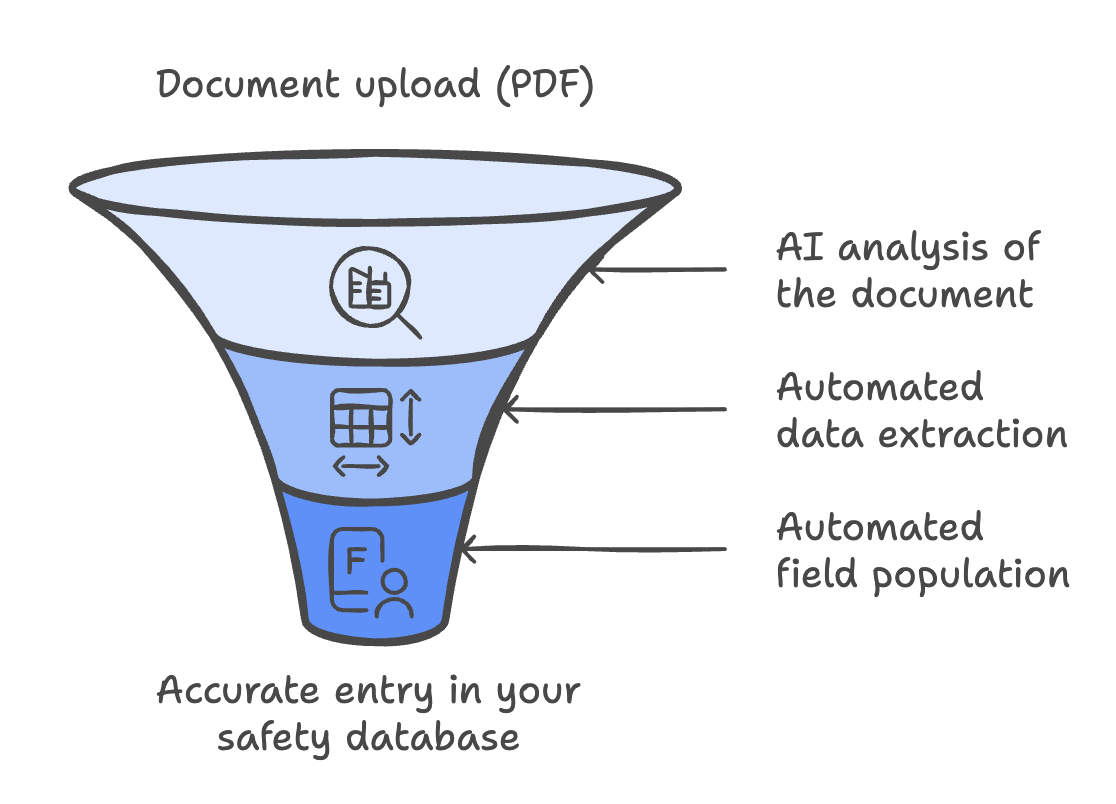

In many cases, I strongly believe the advances in automation prior to the leap in generative AI already go a long way in reducing effort behind mundane tasks, reducing costs, and offering better quality of service or products (typically really the core reasons for AI, however that is communicated), even in the “information” sector.

If AI can meaningfully contribute to those goals, then great. But it shouldn’t be assumed it always does in every business. In many cases, firms may not really see the return on investment (again, bubble concerns…)

At Tepsivo, we do use AI, and a lot. But we know clearly why, how, and what it is (in the PV context). The same way we were crystal clear about our mission and objectives back in 2020, with unambiguous steps on how to achieve those goals, we have defined how AI as a tool serves as an evolution of automation and helps us deliver on our goal to overhaul the still arcane pharmacovigilance industry.

But, let me save something for the next blog post, diving into this topic deeper specifically in the pharmacovigilance context. For now, you may refresh on Martti Ahtola’s take from 2022, already quelling AI hype back then, as well as some specific examples of AI in PV.

[1] That said, the anticipated impact on “junior” jobs seems to have taken place already (see related paper).

This may fit the narrative but alone shouldn’t be seen as a reason for the inevitability of the outlined AI journey.

[2] Naturally, this applies as far as we can link society’s “acceptance” to global investors’ behaviour in public and private markets.

To what extent that connection is real is more of a question for economic historians and political philosophers. In today’s capitalist system, I feel safe to connect the behaviour of markets to larger societal trends.

[3] Food for thought is offered by an AI sceptic, Emily Bender, who, in an FT journalist’s interpretation, claims that AI is just “automation in a shiny wrapper”.

[4] In some regulated industries, you may actually have to write an AI policy. The documentation requirements of the heavily regulated pharma industry are very well known to us.

Still, if you’re writing an AI policy, ask yourself if there is a reason to do it aside from being required to.

[5] More worryingly, if we accept it is more than a tool (more in the sense of the “AI overlord” line of argument), we’re then falling extremely short in the adoption.

To coexist in any tenable way with “superintelligence”, or even with something that replaces jobs at large (which it likely does already), we need to come up with substantial changes to the societal structure.

I won’t go deeper there in this post, but it suffices to say that in that context, we’re getting it terribly wrong.

[6] Eliezer Yudkowsky and Nate Soares highlight this in their recent book: “If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All”.
